The Digital Transformation of Holocaust Testimony Archives

25 April 2024

Preserving Holocaust testimonies and keeping them accessible matters more than ever. As misinformation and historical revisionism spread, archives play an increasingly essential role, and Yale’s Fortunoff Video Archive for Holocaust Testimonies has helped reshape how we engage with survivors’ stories. This post covers the archive’s evolution, challenges, and significance, highlighting the efforts made to safeguard these memories for future generations.

The Origins of the Fortunoff Video Archive

The Fortunoff Video Archive began in New Haven in 1979 as a grassroots initiative. Survivors, children of survivors, and other volunteers formed a nonprofit organization to record the testimonies of Holocaust survivors and witnesses, ensuring that their voices would not be forgotten.

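The nonprofit, called the Holocaust Survivors Film Project, rented what was then expensive, broadcast-quality analog video equipment to record testimonies. Survivors often served as both interviewers and interviewees, creating an intimate setting. As the project grew, Geoffrey Hartman, a Yale professor of comparative literature, secured grant funding and helped give the collection a permanent home: in 1981, its roughly 180 testimonies were deposited at Yale University.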

Transitioning to the Digital Age

Digitization transformed the archive. Between 2010 and 2015, more than 10,000 hours of testimony were transferred from analog videotape to digital files. Because every transfer ran in real time on aging playback equipment, the work demanded meticulous planning and skilled hands to preserve the recordings’ original quality.

Frank Clifford, a video engineer who had previously worked at Yale Broadcast, played a pivotal role. Using SAMMA Solo systems and a fleet of U-matic and Betacam decks, he digitized the collection day after day for years, keeping the legacy machines running by any means necessary and ensuring that the digital files preserve the authenticity of the originals.

Enhancing Accessibility Through Technology

The Fortunoff Archive has long used technology to broaden access; it was among the first collections at Yale to have its own website. A key milestone was its collaboration on the Aviary platform, which made the testimonies available at access sites worldwide and lets users run full-text searches to locate specific topics and events within one testimony or across the whole collection.

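Alongside the videos, the archive maintains indexes: terse, first-person English summaries of each testimony, written by students fluent in the testimony’s language, with a visible time code roughly every five minutes. Originally handwritten, then typed in WordPerfect, Word, and OpenOffice, the indexes were migrated into OHMS (Oral History Metadata Synchronizer) and synchronized with the digital video. The archive is now also transcribing the entire collection in the original languages so that researchers can search in those languages.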

Ethical Considerations in Archival Practices

The archive operates under strong ethical guidelines that prioritize survivors’ well-being. Each testimony includes a release form, giving survivors control over their narratives. This ethical focus extends to how access is managed and how researchers are encouraged to engage: for decades the archive offered English indexes rather than transcripts, partly to push researchers to watch the testimonies, since tone, pauses, and gestures cannot be captured in text.

More than 200 access sites worldwide, mostly universities and research institutes, now offer access to the collection, enabling researchers to engage with testimonies while maintaining survivor confidentiality. This approach reflects the archive’s deep respect for the individuals whose stories it preserves.

Engaging the Public: Outreach and Education

Beyond preservation, the Fortunoff Archive reaches the public through several initiatives. For instance, its podcast “Those Who Were There” presents testimonies in audio form, bringing survivor stories to a broader audience.

The archive also runs a fellowship program, a speaker series, and a film series, and it has released an album. These initiatives promote a deeper understanding of the Holocaust and encourage empathy and awareness among future generations.

Challenges in the Digital Landscape

While digitization has increased accessibility, it has also brought challenges. Misinformation and Holocaust denial threaten the historical record, and as digital manipulation becomes more prevalent, verifying sources matters more than ever.

The archive must also navigate questions of copyright and ownership to ensure that survivors’ rights are protected. Balancing accessibility with ethical responsibility therefore remains a central concern.

The Future of Holocaust Testimony Archives

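Looking ahead, the archive’s future lies in collaboration and innovation. Advancing technology makes it increasingly feasible to integrate Holocaust testimony collections across platforms, and efforts toward a shared, centralized platform could strengthen research and collaboration among institutions. Shared standards help: the archive already describes its testimonies with Library of Congress Subject Headings, which makes its metadata easy to exchange and to search across collections.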

As fewer witnesses remain, preserving these testimonies becomes even more urgent. The Fortunoff Archive remains dedicated to ensuring that survivors’ voices are heard in our collective memory.

Conclusion: The Importance of Remembering

The Fortunoff Video Archive’s work is vital to preserving survivors’ stories from one of history’s darkest times. By combining technology, ethical practice, and public engagement, the archive honors these memories. As we look ahead, it is our responsibility to carry these stories with us so that the lessons of the past continue to guide our present and future actions.

Transcript

Chris Lacinak: 00:00

Hello, thanks so much for joining me on the DAM Right Podcast.

To set up our guest today, I want to first set the stage with two important items.

I founded AVP back in 2006.

Actually, April 21st was our 18th anniversary, so happy birthday to AVP.

Anyhow, over the past 18 years, I’ve had the privilege of working across a number of verticals.

Anyone who has worked in a number of places within their career will know that one of the big and important parts of onboarding and becoming a productive part of a new company is learning and using the terminology.

Each organization has its unique terms and the distinct way that they use those terms.

So you’ll understand when I say that the thing that has differed the most in working across verticals has been the terminology.

Our corporate clients talk about DAM, our libraries and archives clients talk about digital preservation, our government clients talk about digital collection management, and so on.

In truth, there is a great deal of overlap in the skills and expertise necessary to effectively tackle any of these domains.

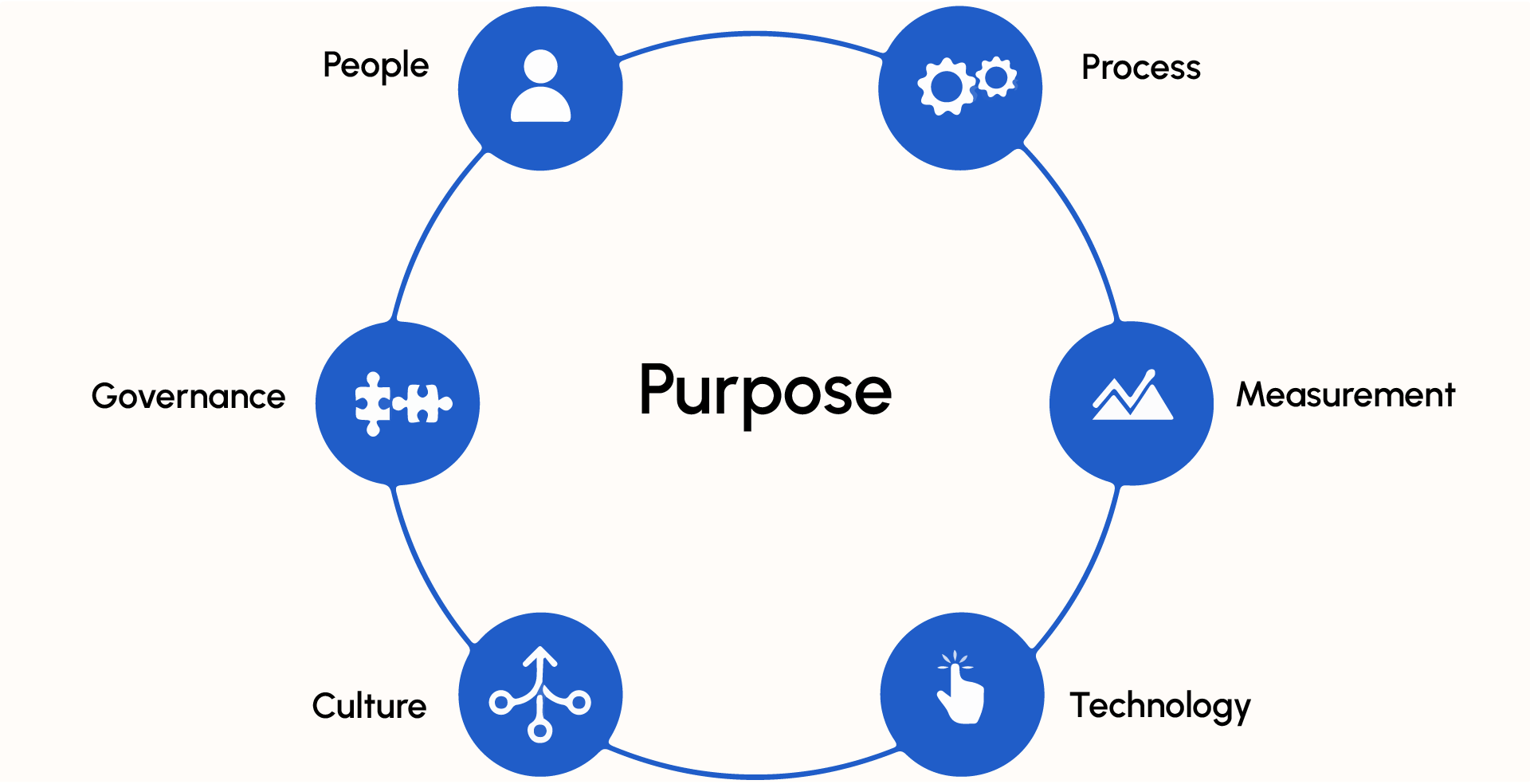

Of course, there is nuance that is important and distinct, which is mostly about understanding purpose, mission, context, and history.

This is akin to learning the terminology of a given workplace and coming to understand the things that make each workplace unique.

Like anywhere, the use of terminology is a signal to people about which tribe you are part of.

Just as words have meaning, how you use those words has meaning.

For years, this reality has caused a great deal of consternation for us at AVP.

Why?

Because we have always worked with an array of customers, we have always had to make sure to be careful and precise in our use of terminology.

With an individual customer, this is easy.

With a website, this is very difficult.

On a website, you have to choose the terms that will resonate with your target audience so that when they land on your page, they know they are with their people.

We didn’t want people who talk about DAM to see us talking about collection management, or vice versa, and conclude that they were not with their people.

But in wanting to avoid offending anyone, we failed to talk effectively to everyone.

In: 2021

Since then, I’ve been relieved to find that 1) we have offended very few of them, and 2) these verticals have also started to embrace the term digital asset management themselves.

Even more, these verticals have started to embrace technologies that use the DAM label.

And conversely, technologies that use the DAM label have started to represent the interests and needs of people who consider themselves to practice digital collection management and digital preservation.

I say all this as a backdrop because the focus of today’s episode is on an archive of video Holocaust testimonies.

It almost feels wrong to refer to these testimonies as “digital assets.”

But even though my guest does not use any technology that refers to itself as a DAM, the practices and skills that are used are digital asset management practices and skills.

A common refrain for digital assets is that they are not digital assets until you have the rights and the metadata to be able to find them, use them, and derive value from them.

Historically, in the distinctions that have existed between the use of the terms digital asset management and digital collection management, one of them is the definition of value.

In DAM conferences 20 years ago, if you talked about digital assets and value, you could be certain that 90% or more of the people in the room were thinking dollar signs.

And if you were at an archive conference and you talked about digital collection management and value, you could be certain that 90% or more of the people in the room were thinking of cultural and historical value.

And while I think this is becoming less true over time, it feels important to say that in this podcast episode, and in the podcast in general, when we talk about digital assets and their value, we mean any and all of the above.

It is very true to say that a file without rights and metadata has no value of any sort financially, culturally, historically, or otherwise.

If you cannot find it, if you cannot use it, it has no value.

So in this episode, I want to assure our listeners that there was a great deal of meaning and relevance in calling these Holocaust testimonies digital assets.

They are truly assets that have a great deal of value in the most holistic and meaningful of ways.

Having said that, and with Holocaust Remembrance Day coming up on May 6th, I am privileged to have the Director of Yale’s Fortunoff Video Archive for Holocaust Testimonies, Stephen Naron, with me today.

Prior to becoming the Director, Stephen was an employee at the Fortunoff Archive where he worked extensively on this collection of materials and helped guide it into the digital age.

Since becoming the Director of the Fortunoff Archive, Stephen has been prolific and innovative in his work to make these testimonies available to the public and to proactively use the materials in the archive to create compelling experiences for people to discover and engage with these testimonies.

This has included collaborating on the development of a software platform, launching a podcast, releasing an album, running a fellowship program, and running both a speaker and a film series.

And that’s not even all of it.

I’m so thrilled to have Stephen Naron on the DAM Right Podcast with me today and to introduce him to the DAM Right audience.

Remember, DAM Right, because it’s too important to get wrong.

Stephen Naron, welcome to the DAM Right Podcast.

I’m super excited to have you today.

Very glad to be talking with you about all kinds of topics around DAM and this amazing collection and archive that you’re the Director of.

Thank you for joining me.

Stephen Naron: 05:40

Oh, it’s a pleasure to be here, Chris.

Thanks.

Chris Lacinak: 05:42

I wonder if we could start with you giving us a bit of background: your history and how you came to be where you are today.

Stephen Naron: 05:51

imonies, on and off now since: 2003

So it really was my first professional job as a librarian and archivist.

But obviously, I’ve always had a deep interest in Jewish history and Jewish culture and Jewish languages.

And I studied abroad, learned Hebrew and Yiddish and German.

And while I was in Germany as a graduate student, I was lucky enough to get a position in an archive at the Centrum Judaicum as a student worker.

And it was the [speaking in foreign language] and this is a sort of general archive for all of the Jewish communities in Germany.

And I worked with that collection for over a year as a student worker.

And that’s when I really was bitten with this sort of bug, this interest in archives in general.

And so that’s when I decided to sort of turn towards the field of archives and libraries.

And when I got my degree, I focused on archives at UT Austin, which was a great program.

I learned a lot.

And then right out of library school, I found the position at the Fortunoff Video Archive.

And so it really was the first professional experience I had.

And I just loved working with this collection.

It’s a collection that’s exclusively audio visual testimonies of Holocaust survivors and witnesses of the Holocaust.

And yeah, so that’s a little bit about my academic background and how I became interested in working in particular with audio visual collections.

Chris Lacinak: 07:45

Wow.

So you’ve been at the archive for quite a while now.

When did you start there?

Stephen Naron: 07:51

In 2003.

And then I moved to Europe with my wife and we were in Sweden.

f years and then came back in: 2015

Fortunoff Video Archive from: 1984

And so I had a wonderful opportunity to have her as a mentor and to really learn from the individuals who helped build the collection over the last 45 years.

Chris Lacinak: 08:46

And has the archive always been under the auspices of Yale University or did it start independent from Yale?

Stephen Naron: 08:53

Well, that’s one of the most interesting things about this collection is that it actually started in New Haven as a grassroots effort of volunteers and children of survivors, survivors, fellow travelers who formed a nonprofit organization in New Haven to record testimonies of Holocaust survivors and witnesses.

o it didn’t come, that was in: 1979

The first tapings were in May of 1979.

And it really was very much an effort from the ground up.

Survivors were in the leadership of the nonprofit. Its president was a man named William Rosenberg, a survivor from Częstochowa, Poland.

Survivors would hold meetings in their homes to organize the tapings.

They’d fund the rental of what was at the time quite expensive video equipment to make professional, broadcast-standard recordings.

And of course, survivors served as interviewers and as interviewees.

So they were on both sides of the camera.

And so that’s in the early days, ’79 starts.

survivors who was recorded in: 1979

And Renee happened to be married to a professor at Yale, Geoffrey Hartman, who was a professor of comparative literature.

And so Geoffrey became involved in this sort of local project, community project, very early on.

And he, as an academic, knew how to write grants.

And so he wrote a number of successful grants to help increase the funding of the project.

And he was then really responsible for bringing the collection and giving it a permanent home at Yale.

So it was deposited at Yale in 1981.

And at that time, there were about 183 testimonies that had been recorded by the Video Archive’s predecessor organization.

This organization was called the Holocaust Survivors Film Project.

So this project then became the Video Archive for Holocaust Testimonies.

And there were about 183 testimonies at the time, and it’s now grown to over 4,300 testimonies.

It’s more than 10,000 hours of recorded material.

It was recorded in North America, South America, across Europe, in Israel, in over 20 different languages, in over a dozen different countries, with the help of what we call affiliated projects, which are independent projects that form a collaborative agreement with the Fortunoff Video Archive.

And so it has just grown exponentially.

And ever since ’82, we’ve been serving the research community.

They come to Yale, use the collection there, hundreds of researchers every year.

And then in about: 2016

And so these access sites are all over the world.

There are over 200 of them.

They’re usually institutions of higher learning or research institutes.

So not only did the collection grow from a small grassroots effort into a sort of global documentation project, but it’s now readily accessible all over the world.

Chris Lacinak: 12:49

You’ve hinted at several things that I just want to kind of put on the table so listeners understand, but the Fortunoff Video Archive for Holocaust Testimonies is all video recordings.

Is that right?

Stephen Naron: 13:01

Yeah, right.

It’s exclusively video recordings.

And in fact, the HSFP, the Holocaust Survivors Film Project, was the first project of its kind to record video interviews with survivors on any sort of extended basis.

So we really are the first sustained project of its kind.

And by sustained, I mean really sustained.

Our most recent interview was in 2023.

So we’re talking about over 40, almost 45 years of documentation.

And so that provides quite a unique longitudinal perspective of this whole genre of Holocaust testimony.

There have been many other projects that followed in our wake.

But most of them rise and fall fairly quickly.

This is a project that’s really withstood the sort of test of time.

rs, who were recording in the: 1980

So when we get a call from a survivor who wants to give testimony and who hasn’t given testimony before, we pull in some of the most experienced interviewers there are who have done this type of work.

Chris Lacinak: 14:29

You mentioned that these were originally recorded, many of them, you’re still recording them, so you’re not recording them on analog videotape today.

But originally they were recorded on what was considered broadcast quality analog videotape.

You talked about there being a digitization process of everything in your collection, I believe at some point along the way.

Could you just tell us about like, what are some of the other, I assume there’s transcripts and other aspects.

Can you tell us a little bit about just what does the collection look like and kind of what are some of the salient steps that you’ve taken to make it usable, preservable, accessible?

Stephen Naron: 15:07

There is a story there.

Because this archive has had such a long history, it’s gone through a lot, and from the very beginning it has been, I don’t want to say groundbreaking, but certainly forward-thinking in its use of technology.

Just the embrace of broadcast video alone was sort of at the time a revolutionary step.

But beyond that, the Fortunoff Video Archive has always been a step ahead, at least within the larger library system at Yale, in thinking about how to make the collection accessible: embracing digital tools and cataloging through RLIN and other central, online, searchable databases.

We were one of the first collections on campus, if not the first collection on campus, to have its own website.

So we’ve always embraced technology, at least for the benefits that it can bring in terms of making this collection more accessible and more available to the research community.

But as far as what other content or what other layers of information that we’ve had to sort of transform from an analog to a digital world, yeah, we’ve had the videos themselves.

And that took over five years. We had an incredible video engineer named Frank Clifford, who used to work at Yale Broadcast and then came over to the Fortunoff Video Archive. By hand, using SAMMA Solos and a fleet of U-matic and Betacam decks, he digitized all 10,000+ hours of video in real time, day after day after day, for years.

Sadly, he passed away.

But really, he did incredible work.

And as you know, as someone who’s worked hands-on with analog legacy video, he kept those machines running by all means necessary.

h shedding tape that was from: 1979

And so, that’s just one step, right?

But then we have all these analog indexes that were handwritten, handwritten notes that describe the content of each interview that then became typed indexes.

And those indexes were in WordPerfect and various versions of Word and OpenOffice.

And so, we have this whole other effort of standardizing and migrating the indexes from one format to another.

We eventually moved everything into OHMS.

So now, all those indexes have been OHMSed, and we’ve connected, of course, the OHMS indexes with the video.

And so, that was a huge effort.

Chris Lacinak: 18:21

Let me stop you just for a second, because I think there’s probably many people that don’t know what indexes are, or at least how you define them, and OHMS.

So maybe let’s just drill down a little bit on that.

What’s an index?

What’s it look like?

How does it work?

And what is OHMS?

Stephen Naron: 18:36

Okay.

So, the indexes are a little idiosyncratic for us, right?

So, we call them indexes.

We used to call them finding aids, which is a lot more in tune with the kind of archival world.

But they weren’t really finding aids per se, either, although they did allow us to find things.

What they were are detailed notes in the first person in English, regardless of what the language of the testimony is.

So, first-person notes written by students who were fluent in the language of the testimony they were watching, and they’re very, very condensed.

So, they read kind of like transcripts, but they aren’t transcripts.

They’re not word for word.

The goal was to capture the most salient details of the testimony in as terse a form as possible.

And every five minutes, the student would put a time code from the video, a visible time code, so that researchers could then use these indexes or notes or finding aids to find specific speech events in the testimony.

This was long before you had SRT and WebVTT kind of transcripts, right?

You’d use this paper.

So, they’d get this paper index.

They’d take it with them.

They’d have the video, a VHS use copy, sitting in Manuscripts and Archives in Sterling Memorial Library, and they’d look through the notes, trying to find the section of the testimony that was most relevant to their research.
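The paper-index workflow described above amounts to a lookup: find the last index entry whose time code is at or before the point of interest. Here is a minimal Python sketch, assuming each index is a list of (visible time code, note) pairs sorted by time. The function names and the placeholder notes are hypothetical and are not drawn from the archive’s data or tools.

```python
from bisect import bisect_right


def parse_timecode(tc: str) -> int:
    """Convert a visible 'HH:MM:SS' time code into seconds."""
    hours, minutes, seconds = (int(part) for part in tc.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def note_at(index: list[tuple[str, str]], tc: str) -> str:
    """Return the note for the index segment that covers time code `tc`.

    `index` holds (start_time_code, note) pairs sorted by start time,
    with one entry roughly every five minutes.
    """
    starts = [parse_timecode(start) for start, _ in index]
    position = bisect_right(starts, parse_timecode(tc)) - 1
    if position < 0:
        raise ValueError(f"{tc} precedes the first index entry")
    return index[position][1]


# Hypothetical index with placeholder notes, for illustration only.
example_index = [
    ("00:00:00", "placeholder notes for minutes 0-5"),
    ("00:05:00", "placeholder notes for minutes 5-10"),
    ("00:10:00", "placeholder notes for minutes 10-15"),
]

print(note_at(example_index, "00:07:30"))
```

Binary search over the sorted start times mirrors what a researcher did by eye: scan down to the last time code that does not exceed the point on the tape.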

And so, those notes exist, those indexes, those notes, those finding aids, they exist in a number of different forms.

And even more confounding, the indexes were created from the use copies, which had visible time code, and that visible time code was not the same as the time code on the original master tapes, because the VHS use copies, of course, don’t start and stop at the same time as the master tapes.

So, there was this discrepancy between the time code on the notes and the time code on the master tapes, so we couldn’t use the indexes properly with the digital master videos.

And there was no programmatic way to simply transform the index timing into the master tape timing mathematically.
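To see why one subtraction could not bridge the two time codes, consider a use copy that dubs several master tapes back to back, each beginning at its own point on the use copy. Converting a use-copy position into a master-tape position then requires a table of where each master tape begins, information someone would have to establish by watching. The sketch below is a minimal illustration under hypothetical assumptions: the start points are known and each master tape’s time code starts at zero. The function name and the sample layout are invented for illustration.

```python
from bisect import bisect_right


def to_master_position(use_copy_seconds: int, tape_starts: list[int]) -> tuple[int, int]:
    """Map a position on a use copy to (master tape number, seconds into that tape).

    `tape_starts[i]` is the hypothetical use-copy position, in seconds, where
    master tape i + 1 begins. Each master tape is assumed to start at 0:00:00.
    """
    i = bisect_right(tape_starts, use_copy_seconds) - 1
    if i < 0:
        raise ValueError("position falls before the first master tape")
    return i + 1, use_copy_seconds - tape_starts[i]


# Hypothetical layout: tape 1 starts 15 s into the use copy, tape 2 at 31:00.
starts = [15, 1860]
print(to_master_position(600, starts))
print(to_master_position(2000, starts))
```

Without recorded start points, no such table exists, which is why re-synchronizing each index against the digital masters in OHMS was the practical route.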

So, that’s when we found OHMS, which stands for Oral History Metadata Synchronizer, and we saw that it was an ideal system. OHMS is a free tool that you can use to synchronize text-based data, such as indexes, finding aids, and transcripts, with digital audio or video.

And so, we did that with the entire collection, which also took us years, but now all the indexes are full-text searchable in Aviary, which gives researchers an enormous amount of flexibility in locating specific topics and events within a testimony or across all the testimonies.
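Searching synchronized indexes across a whole collection is, at its core, an inverted-index lookup that returns a testimony plus a time offset to jump to. The sketch below is a generic illustration, not Aviary’s implementation, which the conversation does not describe. The testimony IDs and the note text are placeholders.

```python
import re
from collections import defaultdict

# Each testimony maps to (segment start in seconds, index text) pairs.
Segments = dict[str, list[tuple[int, str]]]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def build_index(testimonies: Segments) -> dict[str, set[tuple[str, int]]]:
    """Map each word to the (testimony_id, segment_start) pairs that contain it."""
    inverted: dict[str, set[tuple[str, int]]] = defaultdict(set)
    for testimony_id, segments in testimonies.items():
        for start, text in segments:
            for word in tokenize(text):
                inverted[word].add((testimony_id, start))
    return inverted


def search(inverted: dict[str, set[tuple[str, int]]], query: str) -> list[tuple[str, int]]:
    """Return the segments that contain every word in the query, sorted."""
    words = tokenize(query)
    if not words:
        return []
    # .get() avoids inserting empty entries into the defaultdict.
    hits = set.intersection(*(inverted.get(word, set()) for word in words))
    return sorted(hits)


# Placeholder collection, for illustration only.
collection = {
    "testimony-a": [(0, "placeholder note about school"), (300, "placeholder note about a letter")],
    "testimony-b": [(0, "placeholder note about a market"), (300, "placeholder note about school friends")],
}
inverted_index = build_index(collection)
print(search(inverted_index, "school"))
```

Returning the segment start alongside the testimony ID is what lets a player jump straight to the matching moment instead of handing the researcher a block of text.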

Chris Lacinak: 21:40

You created indexes rather than transcripts. Was that because of the amount of time it took, or because that was best practice at the time?

Why did you take that route instead of transcripts at the time?

Stephen Naron: 21:53

That’s also a really good question.

Well, actually, there is a practical side, it simply was too time-consuming and expensive to create full transcripts, and this is a collection that really grew very slowly and has had limited resources its entire existence, so we had to be cautious about where we sort of put our resources.

And so, these indexes seemed like the quickest, most cost-effective way to gain intellectual access to the collection.

And the archivists then used these indexes to create catalog records, regular old MARC catalog records, so every testimony was cataloged almost like a book.

And you could then search across those catalog records.

But beyond the practical side, there was also an ethical and I think intellectual reason not to go the path of transcripts.

One was that no transcript, no textual transcript can truly capture the richness of an audio-visual document.

You cannot capture gestures, you cannot capture tone, you cannot capture pauses that are very meaningful in a recording like a video testimony of a survivor, the look in the eyes.

I mean, these are things that cannot adequately be captured in a transcript.

And so, the thought was if you can’t make an accurate transcript, we have to really push the viewer to watch the recording.

And again, that’s also part of the ethos of the archive is that we want you to watch.

We want you to witness the witness, right?

We want you to be present, entirely present.

And if you provide transcripts to researchers, as we all know, the researchers will go straight to the transcripts and use the transcripts and might not even watch the video.

And, you know, some researchers are lazy like that.

But we felt that that was an ethically unsound use of video testimony.

And so, we really want to, we sort of pressure, let’s say, or coerce the researcher to watch the video and to watch the video in its entirety.

And I think that’s an obligation.

There’s an ethical obligation there that needs to be followed.

Chris Lacinak: 24:28

Yeah, that’s really interesting.

So, you didn’t want to mediate, it sounds like, you didn’t want there to be a mediation between the person that was watching or using these materials and the original testimony.

That’s super interesting.

It makes a lot of sense.

Stephen Naron: 24:40

Well, I mean, it also, it does make sense because, I mean, think about it.

If a survivor says something in an ironic tone of voice, how are you supposed to recognize the irony or sarcasm in a transcript?

You have to listen and watch in order to truly grasp what’s happening.

Otherwise, researchers will quite simply make mistakes.

They will misquote and misinterpret.

Chris Lacinak: 25:09

So I sidetracked you there.

You were kind of on a path talking about the various elements that you have in the archive.

You were talking about indexes and OHMS when I stopped you.

And were there other things that you wanted to talk about there?

Stephen Naron: 25:22

Well, there are a couple of other things that are interesting and we’re still trying to figure out how to integrate them.

One is we conducted something called a pre-interview.

The process we follow when we record testimonies is that there’s contact with the survivor a week or several weeks before the interview. At least one of the interviewers who will be at the session calls the witness and explains how it’s going to work: it’s a very open-ended interview process, they’ll introduce themselves at the beginning and then tell their story, starting from their earliest childhood memories all the way up to the present, and there aren’t set questions.

But they then also ask them a series of questions, mostly biographical questions.

Where were you born?

When were you born?

What did your parents do?

Did you have any siblings?

And so they gather all this information prior to the actual interview so that they can then go back to the library and do research about this person’s life.

So the town they’re from, learning about the town they’re from, learning about the camps and the ghettos that they might have been in, you know, really diving into this person’s life so that when they show up in the actual recording, the interviewers are already well informed about this person’s life.

They know the names of the siblings and the parents and what they did and they don’t have to ask these questions because they know it.

And then they can just serve as sort of guides or assist the witness as they really tell their life story in as open a manner as possible.

So those pre-interview forms are really interesting.

Also because the interview, once they get into the recording studio, there’s a lot of unknowns.

So sometimes the information that’s on the pre-interview doesn’t make it into the interview because the interview has a kind of life of its own.

But we need to find a way to make the data in those pre-interview forms more accessible to the researchers because there’s some interesting information there.

And then the other piece is we’re creating transcripts now.

So as I mentioned, those indexes, those finding aids, they’re always in English no matter what the original language is, which can be really frustrating for researchers who know these languages and then have to search in English, let’s say, to find information in a Slovak testimony or a Hebrew testimony or a Yiddish testimony.

So we’re now in the process of transcribing the entire collection in the original languages so that native speakers and researchers can search across testimonies in their language. In a way, that’s a compromise and a move away from what I said earlier: if we provide transcripts, the risk is that people will just use the transcripts and not watch the video.

But we felt this was a necessary step in this day and age to provide further intellectual access.

Chris Lacinak: 28:39

Well, it also seems that there’s been a major technological leap, whereas today I know the way that you provide access to transcripts is synchronized with the testimony.

So I mean, that’s a very different experience than maybe 15 years ago where someone would have just gotten that transcript and may have never watched the testimony, right?

That seems like that’s a very different experience and stays true to what you said about why it was important not to do that at the time.

Stephen Naron: 29:05

Yeah, absolutely.

And also think about, we’ve also been approaching transcription with another, you know, another motivation.

And that is that, obviously, people who are hearing impaired can’t take advantage of an audiovisual testimony in the same way that a hearing person can.

So to be able to provide the transcript and subtitles for testimonies is also really valuable.

The other thing is that many of these testimonies can be extremely difficult to understand because the survivors are often speaking in a language that isn’t their native tongue.

And so there’s a lot of heavily accented testimonies.

And so having transcripts and subtitles, transcripts as subtitles can be really valuable for everyone.
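Serving transcripts as subtitles typically means emitting a timed-text format such as WebVTT, which came up earlier alongside SRT. Here is a minimal sketch, assuming transcript segments are already available as (start, end, text) tuples in seconds. The function names are hypothetical, and this is not a description of the archive’s actual pipeline.

```python
def vtt_timestamp(seconds: float) -> str:
    """Format a time in seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def escape_cue_text(text: str) -> str:
    """Escape characters that WebVTT cue text reserves for markup."""
    return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")


def to_webvtt(segments: list[tuple[float, float, str]]) -> str:
    """Render (start, end, text) transcript segments as a WebVTT file body."""
    lines = ["WEBVTT", ""]
    for start, end, text in segments:
        lines.append(f"{vtt_timestamp(start)} --> {vtt_timestamp(end)}")
        lines.append(escape_cue_text(text))
        lines.append("")  # a blank line ends each cue
    return "\n".join(lines)


print(to_webvtt([(0, 4.5, "First placeholder line."), (4.5, 9.25, "Second placeholder line.")]))
```

Cue text that itself contains blank lines would need further handling, since a blank line terminates a cue in WebVTT.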

Chris Lacinak: 29:56

Speaking of the technological leap, some of the things you were talking about, right?

Writing indexes down on paper, and before digitization, everything was on videotape.

When did you do the digitization work again?

What year or years?

Stephen Naron: 30:07

So I would say 2010 to 2015.

By the time we launched Aviary and made the testimonies accessible at access sites, the vast majority of the digitization work had been done.

Chris Lacinak: 30:28

Two points about that.

One is it sounds so archaic this day and age, right?

Writing indexes down on paper.

And I believe there’s probably many modern practitioners that think that that sounds absurd.

But two points. One, that wasn’t that long ago, and that was not unusual.

That was pretty typical of what you’d find in a lot of people that were managing collections, especially of analog materials.

Two, just as an insight into, you know, your one archive out of many archives in the world and just to think about how many people haven’t done what you’ve done, which is the digitization work, the transcription work, the, you know, you’ve, as you said, you’ve embraced technology and that’s not to put anybody down that hasn’t.

It’s just to kind of get a moment, a glimpse into how many things that are out in the world that were created not that long ago and for decades prior that still may be not accessible in some way.

Stephen Naron: 31:33

Yeah.

And I mean, also, if you think about many traditional oral history projects, they would often record on tape or video, create the transcript, and then hand the transcript to the interviewee, who would then, you know, sign off that “this transcript is an accurate depiction of my statement,” right?

And then they’d actually get rid of the original tapes because the transcript then becomes kind of the document.

So yeah, we’re very different.

We’ve approached this very differently than a lot of oral history projects.

And yeah, absolutely, we’re really lucky with this collection. As I said, it’s still very small in terms of the human resources who work with it, but we’ve been lucky to have the longevity that we have and the support from Yale University Library, which really allows us to focus exclusively on this collection, right?

So from the beginning, there has been this laser focus on making this as intellectually accessible and usable and standard, right?

So we’ve used, you know, standard library and archival practice to make this collection accessible using, you know, terminologies and taxonomies like Library of Congress subject headings and things like that, that make it very easy to share our metadata with others to search across collections.

And so yeah, I think we’ve been very lucky to be a part of a research library from the very beginning, which helped us to go down that path of description and description upon description upon description.

Chris Lacinak: 33:22

And I guess you also have the benefit that, although the archive is large in absolute terms, in relative terms it’s fairly small.

So that gives you an advantage: you’re able to really dive deep and do a lot of great work, compared to an archive that might have hundreds of thousands or millions of recordings.

Stephen Naron: 34:35

Yes, for sure.

Chris Lacinak: 34:37

The Fortunoff Video Archive for Holocaust Testimonies is not the only archive of Holocaust testimonies in the country or in the world.

And each of those have had to make decisions about where, when, how to give access.

And my understanding is that different decisions have been made about how to provide access to testimonies.

I wonder if you could just give us a sense of the landscape.

You know, are there a few or are there dozens of archives of Holocaust testimonies?

And help us understand some of the considerations these archives have had to navigate in thinking about how to provide access to Holocaust testimonies. I’m not trying to say that anybody’s right or wrong or anything like that.

Stephen Naron: 35:29

There are many, many collections all over the world.

[Many were created] after us, after we started in 1979.

And they do have indeed very different approaches to making the collections accessible.

[…] We have to remember that in 1979 […]. [The US Holocaust Memorial Museum] wasn’t really established until 1993.

And prior to that, there weren’t a lot of other organizations doing this work in the United States or in North America.

But the US Holocaust Memorial Museum is a national institution.

It’s a government-funded institution.

And so for the materials they create, the testimonies that they’ve created and collected over the years, they’ve been given very broad permission to make those as accessible as possible.

And I think that’s in part because they see their mission as a general educational effort, right? To educate the general public, the American people, about the history of the Holocaust.

In order to do that best, they have to make their sources as accessible as possible.

That includes testimony.

So their testimonies, of which they have thousands, are all digitized and accessible in their collection search online.

So there really are no barriers at all to the average citizen researcher who wants to go in and watch as much unedited testimony as he or she desires.

So that’s a very open model.

And I think there are a lot of benefits to that.

I do sometimes wonder how many members of the general public are really interested in watching an unedited 10-hour testimony of a Holocaust survivor, how many really do that.

But for the research community, it’s certainly an enormous advantage.

There are other national institutions, like Yad Vashem in Israel. Yad Vashem has an enormous collection of testimonies, both ones they’ve created themselves and ones they’ve collected over the years.

Many of those are available online, but the vast majority, I would say, are only available to researchers on site.

So they have a slightly more restrictive approach.

But their aim has been to collect as much of the source material as possible, either in original form or as digital copies.

So they’re a little bit more restrictive in a sense.

And then you have another major collection, the USC Shoah Foundation, which was started by Steven Spielberg in ’94, after the release of Schindler’s List.

And he and his organization, the organization that grew out of this initial impulse, collected something like 50,000 testimonies of survivors in a very short period of time, I think less than 10 years.

And they’re now at USC, but they weren’t at USC originally.

They were on the Universal Studios backlot, I think.

And so they had a very different approach to this work, almost outside of the traditional world of academia and libraries.

And for a long time, their collection was only accessible, and for the most part it still is, through a subscription model.

And so they have this enormous, incredible collection, but it’s only accessible at universities and research centers that have the resources to pay the subscription fee.

And so that’s another model that is a little bit more restrictive.

At the same time, they have free tools for high schools and for educational use, something called IWitness that has something like 3,000 unedited testimonies that are openly available.

So, they still provide thousands of complete unedited testimonies, but the vast majority of the collection is behind a paywall.

And then you have the Fortunoff Video Archive, which has now digitized its entire collection.

But for decades, its collection was only accessible at Sterling Memorial Library in the Manuscripts and Archives Department in the reading room at Yale University.

So you’d have to make the pilgrimage to New Haven to work with this material.

And so that’s also in a sense, very restrictive.

Not all researchers can afford to make the trip to New Haven to do that type of work.

But there are no costs involved with using the collection.

So in a sense, it’s open to everyone.

And that’s how it worked at Yale.

And so that was a bit restrictive.

But now that the collection is digital, we’ve also opened up by making it available at these access sites, more than 200 of them, as I already said. Still, it’s not like we’ve thrown it all up online like the US Holocaust Memorial Museum.

It’s still kind of like a closed fist that’s kind of slowly opening, right?

And it’s only accessible at these access sites.

It’s free, so the access sites don’t have to pay a subscription fee, but they still have to sign a memorandum of agreement with us.

It’s only accessible on IP ranges that are associated with those institutions, so at various universities and research centers.

So there’s still a certain amount of restriction on who can see it when and where.

And we just have a very different model.

And that model of how to use a collection like this comes from, I think, the fact that we were started by survivors themselves and children of survivors.

This organization from the very beginning was very concerned about the well-being of the survivors before, during, and after the interview has been given.

All of the witnesses sign release forms, and in these release forms, it clearly states that Yale University owns copyright to the recording.

We can do, theoretically, legally, whatever we’d like, but that doesn’t mean we should.

And there was always a sense that the survivors, although they quite clearly wanted to share their story with us and in a very public manner by giving testimony, they still deserve some modicum of privacy and anonymity.

And so we’ve been fairly restrictive in terms of not making it widely accessible online.

[…] et cetera, but could survivors in 1981 […]

That’s what the internet is.

And that feels like a step too far without any kind of mediation for us.

Chris Lacinak: 43:53

You also talked about 200 access sites.

Could you tell us what are those?

Who are they?

How do they work?

What does that look like?

Stephen Naron: 44:01

Yeah.

I mean, I actually did want to say something else about some of the ways in which we, the collection, place certain restrictions on access that might seem a little strange or idiosyncratic.

Another example that I forgot to mention: things are slightly locked down, in the sense that they’re only available at access sites.

But another thing that’s really unusual about this collection is we also truncate the last names of the survivors.

So if you were to go to Aviary and search the metadata, you would see very quickly that the titles of the testimonies are, you know, “Stephen N. Holocaust Testimony,” “Chris L. Holocaust Testimony.”

The last names are hidden from view.

And obviously once you’re at an access site and you’re watching the testimony and the person introduces themselves, you hear their name, you hear their last name.

And in the transcript, if they say their last name, it’s transcribed there, but you don’t see the transcript unless you’re at an access site either.

And the reason behind this was that in the early days, one survivor’s full name appeared in a documentary film that was screened on television.

And the survivor received threatening phone calls after the film was screened.

And after that, they decided that this was a risk they were unwilling to take and pushed to truncate the last names in order to protect the survivors’ anonymity.

Of course, if you do research, it’s not foolproof.

If you make the effort to come and do the research, you can find out all this information, personal information.

But the idea was to provide some basic hurdle that would provide some protection.

And as you can imagine, that’s served its purpose well, but it also complicates the research process for the research community.

If you’re a researcher and you’re looking for a very specific person who you know gave testimony, it’s much harder to locate them.

You can’t just search for their last name and find them.

So that’s an example of things that might seem sort of counterintuitive.

We did this, though, to protect the survivors.

That’s what we saw as our first ethical obligation.

And then we have the obligation to the research community, which comes second.

And that’s also a little bit unusual for an organization such as ours.

But you had a question, beyond this sort of access, about what the access sites were and how they worked.

So the access sites are mostly universities and research institutions.

And Holocaust museums, all over the world: South America, North America, Israel, Europe.

We even have an open access site in Japan.

And the access sites sign a memorandum of agreement that clearly states what they can and cannot do with the collection.

They provide us with their IP ranges.

So we restrict the collection to an IP whitelist of all of the IP ranges at these institutions.

So you either have to be on campus to watch the testimonies or use a VPN that only students and faculty will have.

Everyone has to register in Aviary, our access and discovery system.

And when we helped develop Aviary, one of our major requirements was that we would have some ability to control who sees what, when, where, and how.

And so we force everyone to register in our collection and ask for permission to view testimonies before they’re given sort of free access to everything.

And so it’s a very protective model.

In some ways, I would guess, it’s in tension with the way a lot of other libraries and archives work, where the anonymity of the user is just as important as the materials they’re using.

But because we have such sensitive materials in this collection, we felt we needed some extra level of control and protection.

Chris Lacinak: 48:41

Relative to what you described earlier, when folks had to come to New Haven, it’s hugely opened things up.

That’s been a major transformation in that regard, it sounds like.

Among the users, you yourself have been a big, proactive user.

You’ve been extraordinarily prolific.

I mean, you’ve talked about co-creating Aviary, but you’ve also created a podcast, I believe, from the collections.

You’ve done an album, which you pressed on vinyl, which was not from the testimonies, but was related to the testimonies.

You have these fellowship programs, you do speaker series, you do film series, you do all sorts of stuff.

Can you talk a little bit about, maybe, you know, there’s a lot to talk about there.

You don’t have to go through each one, but maybe tell us about the podcast and the album that you did.

I’d love to hear a little bit more about that, or if there’s any other of those that you’d really like to highlight.

Stephen Naron: 49:35

Interest in various historical topics always has a kind of ebb and flow, right?

And so, I think, to a certain extent, there can be a sort of complacency about, well, this is an amazing collection, without a doubt.

Researchers will come to us.

But I think that times have changed, and that the research community now expects you to sort of come to them.

And that’s a real fundamental shift in the way we think.

And yeah, as you mentioned, we have the fellowship program.

We have a film grant project where we provide a grant to a filmmaker in residence who then creates a short edited program based on testimonies from the collection.

We have a lot of events and conferences that we support that are designed to sort of lift up the collection in both the public eye, but also among the research community.

We’ve done our own productions based on the testimony.

So the podcast series is already in its third season.

We’re planning to do a fourth season.

And this podcast series is really just, again, like I said, we’ve always sort of embraced new methods and new technologies.

And this really just seemed like the ideal way to bring audiovisual material to a new listenership, to the non-research listenership.

I’m obviously a big fan of podcasts, and I’ve been listening to a number of podcasts that were based on oral history collections.

And there’s one in particular that I stumbled upon called Making Gay History, which is based on the oral histories that Eric Marcus recorded with leading figures in the LGBTQ community.

And I don’t know a lot about this topic.

This is not an area that I know a lot about.

And I found it one of the most compelling podcasts I’ve ever heard based on these archival recordings.

And I said, “Okay, well, we should do something like this.”

And so I asked Eric Marcus if he’d be willing to help produce a series for us.

And he also just happens to be a nice Jewish boy from New York.

And so he agreed and found a team to support him, another co-producer, Nahanni Rous.

And they’ve been producing edited versions of the testimonies in podcast form now for three seasons with quite a bit of success.

You know, over 100,000 downloads and streams on Spotify.

And so these are listeners that would probably never stumble into Aviary at an access site and use the collection that way.

They might find some of our edited programs on our website or on YouTube, but this is just another way to push these voices out into the public.

Chris Lacinak: 52:59

And for listeners, the name of that podcast is “Those Who Were There,” right?

Stephen Naron: 53:03

Yeah, “Those Who Were There.”

The latest…

And if you go to our website or Google “Those Who Were There,” you’ll find it.

You can listen to it on the website as well as on all your podcast apps.

But the website has a lot of other additional information, including episode notes for each episode that are written by a renowned scholar, Professor Sam Kassow, who provides additional context about each episode, which is really valuable, and further readings.

[…] that we’ve gotten from the families, scanned images from family archives.

So it’s a really…

I think it’s, you know, on the one hand, it’s a little strange because you’re taking a video testimony and removing the video and making it into audio in order to do this.

So it feels like you’re losing something, obviously, in this transformation.

But you also gain something because as you know, if you listen to podcasts, you know, when it’s just you and a pair of headphones and you’re walking down the street listening to a podcast, you just sort of disappear into your head and it’s very intimate as well.

So I think it’s appropriate, although there is something lost and something gained.

And then you mentioned the songs project.

So that’s called “Songs from Testimonies.”

It’s also available on our website.

And that’s really a…

It really started as a kind of traditional research project.

So one of our fellows, Sarah Garibova, discovered some really unusual songs that were sung in a testimony that we’d never heard before when she was creating her critical edition.

And we found the song so compelling that we asked a local ethnomusicologist in New York and a musician himself to come and perform the songs at a conference as a sort of act of commemoration.

And we were just blown away by the results and thought that we need to do more of this.

And so it became both an ethnomusicological research project, but also a performative project.

So Zisl Slepovitch is our musician in residence, and he’s moved through the collection, locating testimonies with song, sometimes fragmentary songs that were interwar songs, religious songs, songs that were written in ghettos and camps that may be very well-known, but may also be completely unknown.

And he’s done the research, and then he’s performed these songs.

He’s created his own notation or his own composition for each of the songs and performed them.

And we’ve recorded them with an ensemble, and they’re now available for listening.

And there’s been concerts.

We’ve performed the songs several times in concert with the context, showing excerpts from the testimonies.

Where does the song come from?

Explaining how the song emerges and the meaning of the lyrics.

And yeah, so it’s a research project.

It’s a performance project.

It’s a commemorative project.

It’s also a really valuable learning tool.

It’s a way for the general public to enter into a difficult topic and learn a lot about testimony.

So it’s been a pretty rewarding project.

Chris Lacinak: 56:30

Such a beautiful story.

I love that.

And I also know that you pressed it on vinyl as well, didn’t you?

Stephen Naron: 56:39

Yeah, well, because I’m a music nerd.

So this was…

Well, and I mean, also, I’m an archivist, and vinyl lasts a really long time.

So my thought was that if we press it on vinyl, it will last longer than if we do it on CD.

We also do it on CD, and it’s available in all the streaming services as well.

But it is a work of art.

We had a local letterpress artist, Jeff Mueller, who runs Dexterity Press.

He printed each of the sleeves by hand.

And they were designed by this incredible Belarusian artist, Yulia Ruditskaya.

And she did all the design work.

She actually created an animated film around one of the songs as well.

She was one of our Vlock fellows, through the Filmmaker in Residence Fellowship. There’s more information about that on our website as well.

So yeah, it’s a really interesting project.

And I’ve learned a lot about the value of music as a historical source through this effort.

But also the music itself is just quite beautiful.

These are world-class musicians performing these pieces.

It’s really something to listen to.

Chris Lacinak: 58:01

So I’d like to circle back to the discussion around the other Holocaust testimony archives and collections that exist out in the world.

For someone who’s an outsider to the nitty-gritty details of all that, you gave us some good insights into what some of the variances and variables are.

But it would seem that, as a naive user interested in researching Holocaust testimonies, I might be able to go to a single place and search across all of these various collections, or at least a number of them.

Does that exist?

Is that in the works?

Are there discussions amongst the various entities that hold and manage collections?

Stephen Naron: 58:48

Well, what I would say to that naive researcher is, there absolutely should be something like that.

And it is a shanda that there isn’t.

And yeah, there are discussions about how to make that possible.

And there have been some small attempts.

But at this point, I think my description of the different organizations and their different policies around access also points to the underlying problem here, which is that all of these organizations are unique, individual organizations with policies and procedures and politics that can prevent them from playing nicely with one another.

And I certainly include the Fortunoff Video Archive.

I’m not excluding us from this, right?

So it’s not about the technology.

The technology is very much there to make it possible for a sort of single search across testimony collections that would reveal results for the research community.

And I think it absolutely has to be the next step.

And not just for the research community, but for the families.

One of the most infuriating things, I think, for children and grandchildren of survivors is they don’t know where their grandparents’ testimony is.

Which archive is it in?

They have no simple way to find it.

And that seems to me to be a major disservice to the families of the survivors who, at great emotional risk, gave us their testimony.

So we really need to find a way to do that.

And we need to work together across organizations to make that happen.

US Holocaust Memorial Museum has also made some really important inroads in this regard.

They have something called Collection Search, into which they’ve added metadata from the USC Shoah Foundation and from the Fortunoff Video Archive, since they have access to our collection on site at USHMM.

So that’s the first search engine I’ve seen where you can actually search across USHMM, USC, and Fortunoff and find testimonies that are related.

And we’re also doing it in Aviary to a certain extent.

So in Aviary, we’ve got a couple of different organizations with testimonies that have joined together to create what’s called in Aviary a Flock.

And so it’s a way to search across.

It’s like a portal that can search across different collections in Aviary.

[…] recordings of survivors made in 1946 […]

And a number of other organizations that have audio and video testimonies in Aviary, and you can search across those as a collective.

And so there are plenty of examples of this working.

We also formed a digital humanities project called Let Them Speak, which brought together over 3,000, I think, survivor testimony transcripts from Fortunoff, USHMM, and USC Shoah Foundation.

And you can search across the transcripts of all those collections.

And that’s also a step, another example of what would be possible.

Imagine a world in which everybody just finally shared their testimonies.

So we have a lot of examples of how this works and the benefits of it, but it’s almost like we need an umbrella organization that would pull all of these disparate groups together and get them to agree on how to share metadata in a way that everyone can access.

Chris Lacinak: 62:51

Right.

Stephen Naron: 62:52

We’re not there yet.

Chris Lacinak: 62:53

Yeah.

Okay.

So some glimmers of hope, but not quite there yet.

Stephen Naron: 62:56

Yeah.

Chris Lacinak: 62:57

Switching gears, I want to ask a question.

I recently had Bert Lyons on the show and we talked about content authenticity.

And I guess I wonder, I mean, this is an issue for every archive, but given the focused efforts around Holocaust denial and things like that, how are you thinking about the prospect of fakes and forgeries in the age of AI? You know, it’s not a new issue.

Fakes and forgeries have been issues for archives for as long as archives have been around, but what’s new is the ability of people to create content that supports false narratives and causes issues for archives like yours.

I wonder, is that something that’s getting talked about within Holocaust testimony circles or is that still on the horizon?

Stephen Naron: 63:54

As technology improves or changes and is more sophisticated and these AI tools become more sophisticated, yes, certainly that’s a new risk, but there are also new technologies and tools to identify things that are fake.

So the technology brings with it new types of artifacts and new ways of testing the authenticity of a digital object.

I can’t really talk about that, because it’s beyond my field of expertise.

But in my area, I mean, really the more dangerous thing instead of like outright denial, which has always existed but is really limited to the margins, is something that you’ve seen more and more of, which is not outright denial, but a kind of half-truth or willful manipulation of the facts to sort of, it’s like denial light.

It’s bad history being sort of marketed as authentic history in order to pursue a particular ideological or political end, right?

So you see this a lot, not to pick on anyone in particular, in certain regimes in Europe that have taken a more populist, authoritarian turn: there have been quite obvious attempts to replace traditional independent scholarship with scholars who are controlled, funded, and supported by the state and the government, and who are asked to willingly misrepresent the truth, right?

So they still cite historical sources, but not in an attempt at objective historical writing, right?

In order to tell a story that is inaccurate, let’s say that Polish citizens were not complicit in the Holocaust and every Polish village was filled with individuals who were willing to hide and save Jews from extinction.

These types of sort of exaggerations and misrepresentations of sources, that’s becoming a much greater threat than outright denial.

It’s also difficult because of the way it’s shaped: it looks like scholarship, looks like research, and it’s presented by official organizations that just happen to be corrupted.

And so that becomes much more of a difficult thing to push back on, but you can and scholars do that and that’s exactly what good scholars do is they push back on this stuff.

But yeah, as for AI, considering the Fortunoff Archive is exclusively an audiovisual collection, it seems pretty frightening what would be possible.

Chris Lacinak: 67:45

Right.

Well, first point well taken.

I mean, it sounds like let’s not focus too much on the nitty gritty of AI at the sacrifice of recognizing the larger issues, which are much broader than that.

So I really hear what you say there and appreciate those comments.

Here’s one of the things I think about. The quick scenario you threw out was that someone creates something fake and there are tools to identify it as fake.

And that’s true.

I think what’s almost more worrisome for me, and what every archive will need to arm itself for, is being able to combat claims that authentic things held within an archive are fake, and having to prove that they’re authentic. And there are technologies to do this, if not today, then on the near horizon.

Right?

Like that is when people start to cast doubt about authentic things being fake, that’s almost more worrisome to me than someone creating something fake and having to prove that it’s fake or saying that it’s authentic.

Stephen Naron: 68:58

Yeah, absolutely.

And that sort of reminds me of the same kind of bad history that I was trying to describe, this sort of willful manipulation of the sources that exist: claiming they’re inauthentic, or misrepresenting them, misquoting them, or quoting them selectively in order to make an argument that’s unsound.

I mean, that’s absolutely true.

That seems like a tactic that could be used.

I mean, at the Fortunoff Video Archive, we can at least point to a chain of, you know, a provenance chain that takes us all the way back to the original master recordings, which are still in cold storage at, you know, in New Haven, right?

So actually I think they’re in Hamden at our storage facility there.

Chris Lacinak: 69:55

For those New Haven geography buffs.

Stephen Naron: 69:59

Yeah, I didn’t want anyone to, it’s not fair.

It’s in Hamden.

But yeah, so I mean, we have a chain that we can then show the sort of authentic steps that were taken.

And even in the digitization process, great care was taken: the SAMMA systems documented the whole digitization process.

That includes what’s happening as the signal changes over time.

So you also have a record of the actual transfer and can show whether or not there have been interruptions, and things like that.

So that’s a pretty detailed level of authenticity control.

Chris Lacinak: 70:43

So Stephen, one of the things that I want to do with this podcast is to step back out of the weeds and reflect on why the work that we do is important, to remind ourselves of the purpose and meaning of this work and rejuvenate it.

And with that in mind, I wonder if you could reflect on the importance and the why behind the Fortunoff Video Archive’s work.

Why is it important?

Stephen Naron: 71:10

Well I think that it’s important for a couple of reasons.

I’ll just give you three.

Well first of all, the Holocaust is quite possibly the greatest crime committed in the 20th century and one of the greatest crimes in history.

And as such, the brutality of the Holocaust has really impacted our society on so many levels.

So from a kind of universal perspective, we’re still very much living in a world that was shaped by the impact of the Holocaust and the Second World War.

Our belief in these ideas of universal human rights, etc., and of course our inability to always adequately support the regime of human rights internationally, this is directly related to the events of the Holocaust.

And so if you really want to understand the world in which we’re living today, you cannot do so without approaching the history of the Holocaust.

And the history of the Holocaust needs to be approached by every generation in a new way.

And working and engaging with an archive such as this is really one of the best ways to approach this topic.

It’s also important, and the work we do is important, because I think the archive is something of a living memorial to those who did not survive, right?

So the survivors themselves are really the anomalies.

They’re the lucky ones.

And the vast majority of European Jewry was murdered, 6 million men, women, and children.

And so I really see this archive as a sort of living memorial to both the survivors and those who did not survive, their families who did not make it.

And so the archive can serve as a bridge between the living, us, and the dead.

And in fact, as time progresses and we begin to reach an era where, simply due to demographic change, there will no longer be any living witnesses of the Holocaust, this archive and archives like it that hold testimonies of Holocaust survivors will only become that much more important.

It will be the only way in which we can really engage with personal stories of the witnesses.

Only diaries and memoirs and testimonies like this can give us access to what it felt like to be there in the war, in the camps, in the ghettos, and to have survived.

And then I think the work we do is important as an act of solidarity with the survivors and witnesses themselves.

And as an act of solidarity, it really has served as a model for what I would call an ethical and empathic approach to documenting the history of mass violence from the perspective of those who were there, the witnesses, right?

So a bottom-up perspective.

And it has served as a model, and it continues to serve as a model for lots of organizations who do the type of important work of documenting human rights and civil rights abuses.

So yeah, those are just three ways in which I think the collection continues to have an impact and is really an important organization.

Chris Lacinak: 75:04

Stephen, thanks so much for joining me today.

It’s been extraordinarily enlightening.

I want to thank you for the work that you do. It’s been amazing to hear about the journey of this incredible collection and archive.

So thank you for sharing with us today.

In closing, I want to ask you a question that I ask all of my guests on the DAM Right podcast, which is totally separate from anything we’ve talked about so far today, which is, what’s the last song you added to your favorites playlist or liked?

Stephen Naron: 75:39

The last song I added to my playlist.

Well, I guess I have to stay true to the archive and, maybe not being entirely honest, say that one of the last songs I put on my playlist was from volume three of our Songs from Testimonies project, which is called “Shotns,” or “Shadows.”

And it would be the title track, “Shotns,” which is a Yiddish song.

That’s in my playlist.

And I hope you all listen to it too.

Chris Lacinak: 76:17

Okay, we’ll share the links to that in the show notes.

Can you tell us what the actual last song you put in your playlist was?

Stephen Naron: 76:24

It’s actually, you know, usually it’s whole albums.

I put whole albums in my playlist.

It’s a Greek avant-garde musician named Savina Yannatou, whom I stumbled upon.

Yeah, the song is called something in Greek, which I will not mispronounce for your audience.

Chris Lacinak: 76:48

I’ll get the link from you so we can share it with everybody.

Wonderful.

All right, well, Stephen, thank you so much.

You’ve been extremely generous with your time and all your insights.

Thank you very much.

I appreciate you taking the time.

Stephen Naron: 76:59

No problem.

Thank you, Chris.

Chris Lacinak: 77:00

Thanks for listening to the DAM Right podcast.

If you have ideas on topics you want to hear about, people you’d like to hear interviewed, or events that you’d like to see covered, drop us a line at [email protected] and let us know.

We would love your feedback.

Speaking of feedback, please give us a rating on your platform of choice.

And while you’re at it, make sure that you don’t miss an episode by following or subscribing.

You can also stay up to date with me and the DAM Right podcast by following me on LinkedIn at linkedin.com/in/clacinak.

And finally, go and find some really amazing and free resources from the best DAM consultants in the business at weareavp.com/free-resources.

You’ll find things like our DAM Strategy Canvas, DAM Health Scorecard, and the Get Your DAM Budget slide deck template.

Each resource has a free accompanying guide to help you put it to use.

So go and get them now.

The Critical Role of Content Authenticity in Digital Asset Management

11 April 2024

The question of content authenticity has never been more urgent. Digital media has proliferated, and advanced technologies like AI have emerged. Distinguishing genuine content from manipulated material is now crucial in many industries. This blog examines content authenticity, its importance in Digital Asset Management (DAM), and current initiatives addressing these challenges.

Understanding Content Authenticity

Content authenticity means verifying that digital content is genuine and unaltered. This issue isn’t new, but modern technology has intensified the challenges. For example, the FBI seized over twenty-five paintings from the Orlando Museum of Art, demonstrating the difficulty of authenticating artworks. Historical cases, like the fabricated “Protocols of the Elders of Zion,” reveal the severe consequences of misinformation. Because digital content is now ubiquitous, organizations must be able to verify its authenticity. Without proper measures, they cannot establish whether the content they hold and share can be trusted.

The Emergence of New Challenges

Digital content production has skyrocketed in the last decade. Social media rapidly disseminates information, often without verification. Generative AI tools create highly realistic synthetic content, blurring the line between reality and fabrication. Deepfakes can simulate real people, raising serious concerns about misinformation. Organizations must combine technology with human oversight to navigate this complex environment.

The Role of Technology in Content Authenticity

Technology provides tools to detect and address authenticity challenges. Yet technology alone isn’t enough; human expertise must complement these solutions. The Content Authenticity Initiative (CAI), led by Adobe, is one effort promoting open standards for embedding provenance data in digital content. The Coalition for Content Provenance and Authenticity (C2PA) develops a technical specification for attaching provenance information, known as Content Credentials, to digital files. Together, these efforts make it easier to verify where content came from and how it has been changed.

Practical Applications of Content Authenticity in DAM

For organizations managing digital assets, content authenticity is crucial, and DAM systems benefit from integrating authenticity protocols. Practical applications include:

- Collection Development: Authentication techniques help evaluate incoming digital assets.

- Quality Control: Authenticity measures verify file integrity during digitization projects.

- Preservation: Provenance data embedded in files supports their long-term reliability.

- Copyright Protection: Content credentials help assert ownership and attribution when assets are shared externally.

- Efficiency Gains: Automating the capture of authenticity data reduces manual errors.
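The Quality Control and Preservation items above rest on a simple mechanism: record a checksum when a file enters the collection, then recompute it later to confirm the file is unchanged. The sketch below is a minimal illustration assuming SHA-256 fixity values kept in an in-memory dictionary; the function names and the file name are hypothetical. It shows plain fixity checking only, not the signed Content Credentials defined by C2PA, and a real DAM would store these values alongside its other provenance metadata.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading in chunks so large video files fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_manifest(paths):
    """Capture a fixity manifest (file name -> checksum) at ingest time (hypothetical helper)."""
    return {p.name: sha256_of(p) for p in paths}


def verify_manifest(manifest, directory: Path):
    """Return names of files that are missing or whose checksum no longer matches the manifest."""
    failures = []
    for name, expected in manifest.items():
        path = directory / name
        if not path.exists() or sha256_of(path) != expected:
            failures.append(name)
    return failures


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        d = Path(tmp)
        master = d / "tape_001.mov"  # hypothetical file name
        master.write_bytes(b"original master")
        manifest = record_manifest([master])
        print(verify_manifest(manifest, d))  # []
        master.write_bytes(b"altered")
        print(verify_manifest(manifest, d))  # ['tape_001.mov']
```

An empty list means every file still matches its ingest-time checksum. Any name returned signals a file that has changed or disappeared and needs human review, which is where the human oversight described above comes in.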

The Risks of Neglecting Content Authenticity

Neglecting content authenticity poses significant risks. Misinformation spreads quickly, damaging brands and eroding public trust. Sharing manipulated content can also lead to legal liability and financial losses.

Collaboration and the Future of Content Authenticity

Collaboration is vital for achieving content authenticity. Organizations, technology providers, and stakeholders must develop best practices together. The rapidly evolving digital landscape demands ongoing innovation. Investing in authenticity technologies and frameworks will become essential.

Case Studies: Content Authenticity in Action

Organizations are already implementing successful authenticity measures. Media outlets verify user-generated videos and images with specialized tools. Human rights organizations embed authenticity data into witness-captured files, ensuring credibility in court. Museums and archives verify digital assets’ provenance, preserving their integrity.

Conclusion: The Imperative for Content Authenticity

Content authenticity is a societal necessity, not just a technical issue. As digital content grows, verifying authenticity will be vital for maintaining trust. Organizations that prioritize content authenticity will navigate the digital age more effectively. Collaboration and technology will ensure digital assets remain credible, trustworthy, and protected.

Transcript

Chris Lacinak: 00:00