The proof is in the standards pudding

June 21, 2016

There are a lot of numbers in this post. This song by Kraftwerk might be useful: “Pocket Calculator.”

We talk a lot about assessment in the digital preservation community. It’s an important part of building strong repositories—it helps to identify gaps in practice, find opportunities for growth, and, what we all strive for, spotlight strengths in our approaches to trustworthy digital repository management. We’re lucky that there are a few tools available to our community that we can use to assess our digital preservation readiness, and in due course, firm up the foundation of our digital preservation systems.

Choosing the tools and approaches for an assessment can be difficult. There are complex standards, like ISO 16363:2012, Audit and certification of trustworthy digital repositories (16363); more lightweight technology and infrastructure guidance, like NDSA’s Levels of Digital Preservation (LoDP); internally devised audits; and others[1]. Each approach and tool has a place in our digital preservation arsenal, but it can be hard to determine which one makes the most sense for one’s institution at a given time and place. Deciding factors might include ease of use, whether a tool takes a holistic approach to the organization, or whether it focuses on one key function in the digital preservation process. Another important factor is whether a tool holds up against recognized standards. Without data to support assertions that an assessment tool is effective, choosing one can be challenging. So, in 2014 AVPreserve set out to investigate how well LoDP, while widely recognized as a helpful assessment tool, aligns with the International Organization for Standardization’s 16363.

The LoDP framework and ISO 16363 standard offer overlapping yet distinct areas of analysis, which are useful, but result in differing reporting classifications and outcomes that are not easy to reconcile. 16363 is a holistic view of digital preservation based on the OAIS framework, taking into consideration organizational infrastructure, digital object management, and information and security risk management. In order to encourage the use of both the tool and standard, AVPreserve staff mapped LoDP categories to the 16363 requirements.

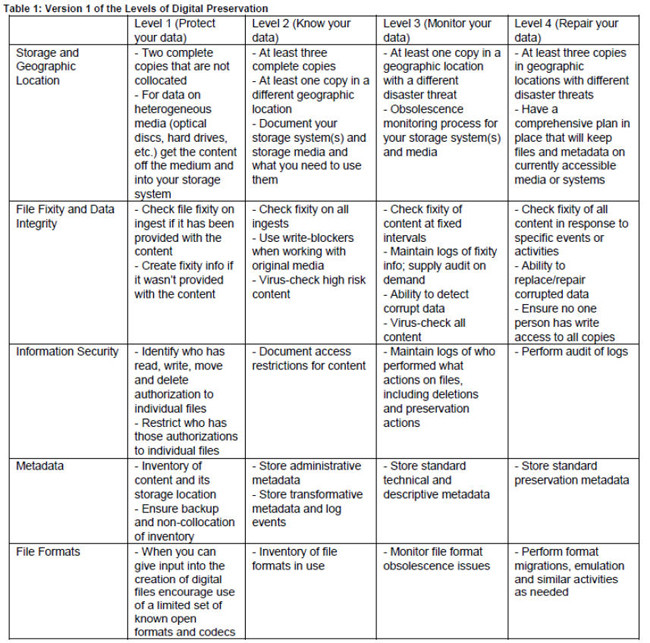

The first step was to assess which of the LoDP categories aligned with the 109 16363 metrics. This is challenging because the 16363 metrics are quite specific (e.g., “4.2.4.1.1 – The repository shall have unique identifiers”), while the LoDP categories are purposefully general. The five LoDP categories are:

- Storage and Geographic Location

- File Fixity and Data Integrity

- Information Security

- Metadata

- File Formats

Each LoDP category has a number of criteria. “File Fixity and Data Integrity,” for example, has 12 separate criteria that must be addressed as an institution moves up the levels of digital preservation readiness from least ready (level one) to most ready (level four). The Levels of Digital Preservation chart shows which criteria are associated with which categories. (Criteria are indicated by a dash [“-”].)

National Digital Stewardship Alliance Levels of Digital Preservation.

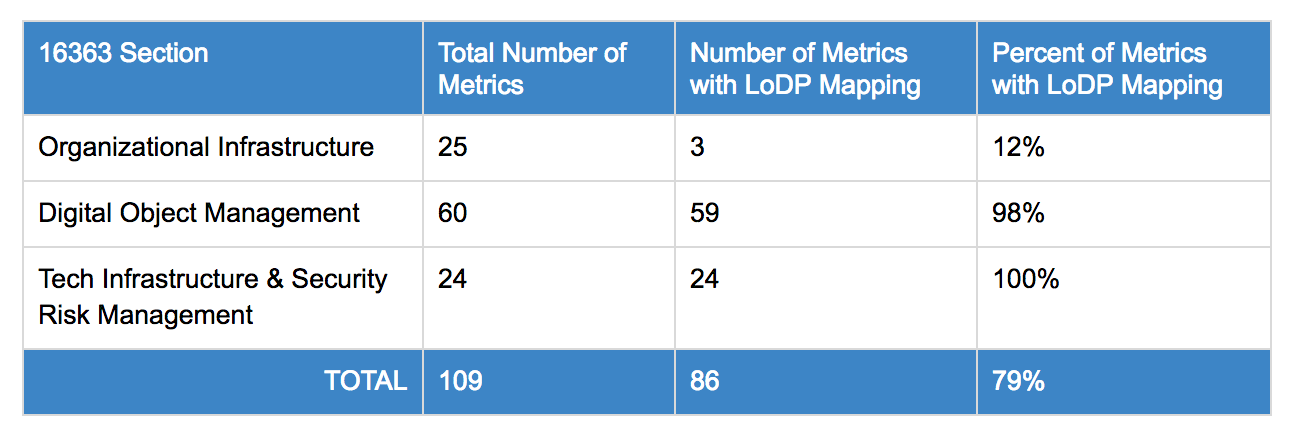

The difficulty and high bar of an internal assessment as a Trusted Digital Repository make it hard for organizations to track or rank their progress towards digital preservation standards. This is one of the factors that sparked the initial LoDP to 16363 mapping. In total, LoDP comprises 36 separate criteria that were mapped to 16363. This initial mapping found that 86 of the 109 metrics in 16363 related to at least one of the LoDP categories based on those 36 criteria.

I have taken this process a step further to think about the implications of the frequency of the five LoDP category mappings to the metrics in 16363. It is important to remember that LoDP addresses infrastructure and technology and not “the robustness of digital preservation programs as a whole, since it does not cover such things as policies, staffing, or organizational support,”[2] whereas 16363 does both. While these organizational factors are hugely important when approaching a digital preservation program from a holistic perspective, they are not the focus of the LoDP tool. So, the two assessment tools will never completely align.

The overlap that does occur mostly comes from sections four and five of 16363, “Digital Object Management” and “Information and Security Risk Management,” respectively. If you are looking for metrics that address technology infrastructure, you would go to these two sections of the standard. So, it makes sense that LoDP aligns with them.

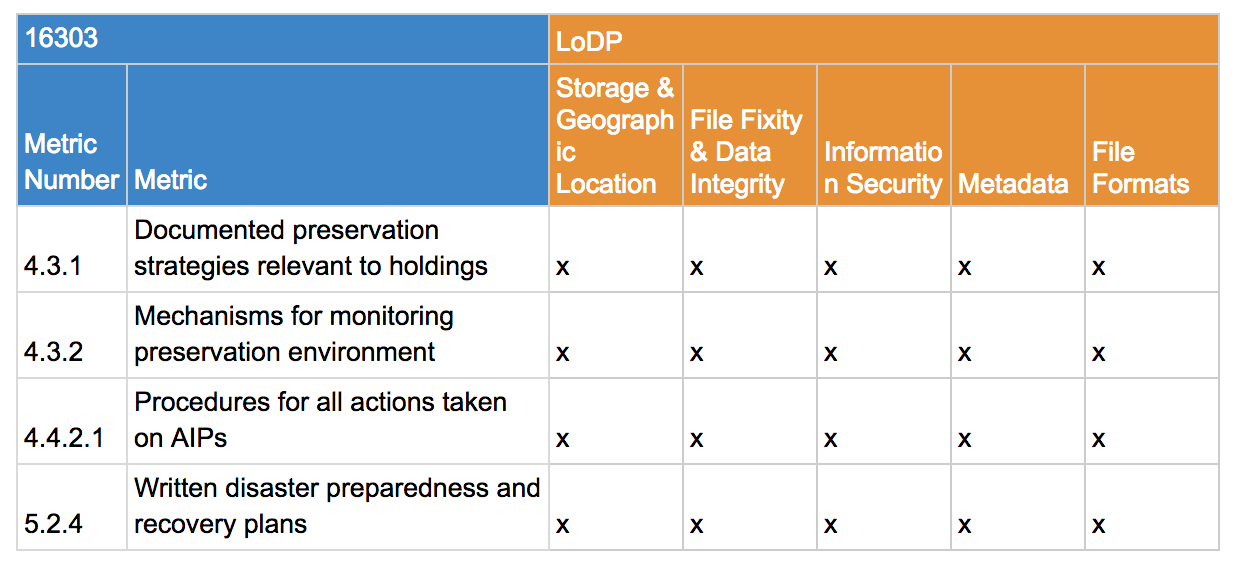

For four 16363 metrics, all five of the LoDP categories map:

These four metrics “score” highest (i.e., the most LoDP categories map to them) because they address things like documenting preservation strategies, the mechanisms necessary for managing the preservation environment, disaster preparedness, and “all actions taken on AIPs.” They are some of the most general of the 16363 metrics, so logically, more of the LoDP’s 36 criteria can be applied to them. Much the same is true of the five 16363 metrics to which four of the LoDP categories map, below.

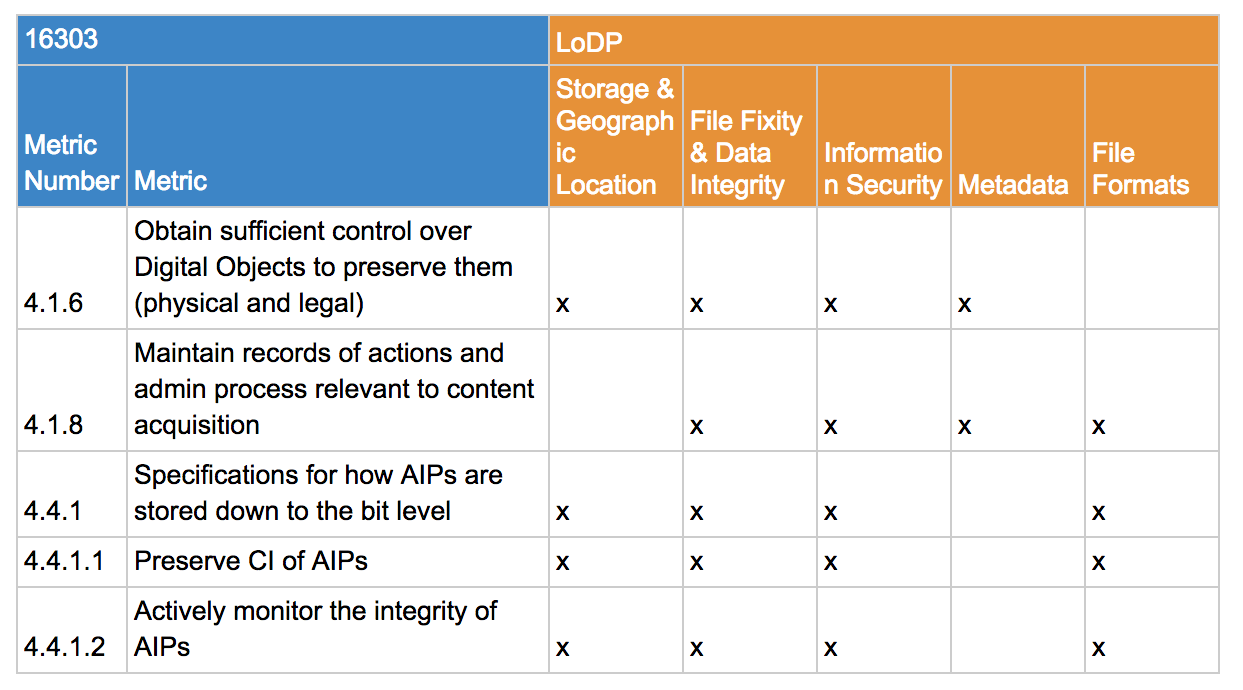

In the case of 4.1.6, for example, obtaining control over Digital Objects (defined in ISO 14721 as “An object composed of a set of bit sequences”[3]) involves managing where the bits are stored, verifying the actions taken on them, ensuring that the bits are secure, and having the right metadata to track it all. “File Formats” is not mapped to 4.1.6 because the metric is format agnostic—it refers only to a set of bits and their management. Those bits could be compiled as TIFFs or WAVs; this is about the actions required to control them—whatever “them” is.

Another example is metrics 4.4.1 through 4.4.1.2, which are hierarchical, and so, to some degree, address the same issues. In this case, they relate to AIPs and their specifications, the preservation of their Content Information (or, “CI,” defined as “A set of information that is the original target of preservation or that includes part or all of that information”[4]), and their integrity. These metrics are wholly technical in nature, relating, as in 4.1.6, to the bits. But, unlike 4.1.6, they address this Content Information piece, as well. The difference is big: the CI contains specifications that must be known in order to render the file, the instructions that say how a JPEG is a JPEG. So, logically, the LoDP category “File Formats” maps to these metrics.

What isn’t relevant to these particular metrics is metadata. Nothing is being described or logged here—the AIPs are being monitored in 4.4.1.2, but in true 16363 every-function-is-its-own-metric form, the act of logging the activity is addressed elsewhere. In this case, documenting the monitoring process is covered in the next metric in the list, 4.4.2, which reads: “The repository shall have contemporaneous records of actions and administration processes that are relevant to storage and preservation of the AIPs.”
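The mappings described above for 4.1.6 and for 4.4.1 through 4.4.1.2 can be sketched as a small lookup table. This is only an illustration in Python, not part of the original mapping document: the names `LODP_CATEGORIES`, `MAPPINGS`, and `score` are hypothetical, and only the metrics discussed in this post are included.

```python
# The five LoDP categories, as listed in this post.
LODP_CATEGORIES = {
    "Storage and Geographic Location",
    "File Fixity and Data Integrity",
    "Information Security",
    "Metadata",
    "File Formats",
}

# Hypothetical structure: 16363 metric -> LoDP categories that map to it.
# Only the four-category metrics named in the post are shown here.
MAPPINGS = {
    "4.1.6": LODP_CATEGORIES - {"File Formats"},  # format agnostic
    "4.4.1": LODP_CATEGORIES - {"Metadata"},      # logging is covered by 4.4.2
    "4.4.1.1": LODP_CATEGORIES - {"Metadata"},
    "4.4.1.2": LODP_CATEGORIES - {"Metadata"},
}


def score(metric):
    """Return how many LoDP categories map to a 16363 metric."""
    return len(MAPPINGS.get(metric, set()))


for metric in sorted(MAPPINGS):
    missing = sorted(LODP_CATEGORIES - MAPPINGS[metric])
    print(metric, score(metric), "not mapped:", ", ".join(missing))
```

Keeping the categories as sets makes the question "which category is missing, and why?" a simple set difference, which is the question the two examples above are answering.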

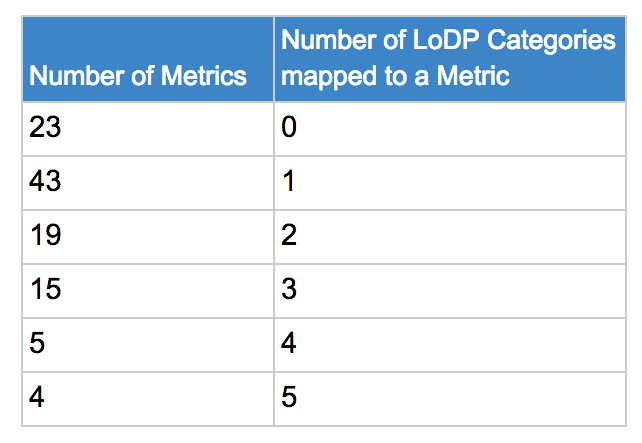

To recap, all of the metrics break down as follows:

There’s no need to go through all of the mappings in this post; you can see them in the document linked to below. But, it is important to reiterate that 79% of the 16363 metrics (86 of 109) align with at least one of the LoDP categories. That’s a lot of overlap between an official standard and a tool that is widely recognized for its value in the digital preservation community, but that does not technically have the cachet of “officialdom” behind it.
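For anyone following along with a pocket calculator, the 79% figure comes straight from the two counts given earlier in this post. A minimal check in Python (the variable names are just for illustration):

```python
TOTAL_METRICS = 109   # metrics in 16363, per this post
MAPPED_METRICS = 86   # metrics that relate to at least one LoDP category

share = MAPPED_METRICS / TOTAL_METRICS
unmapped = TOTAL_METRICS - MAPPED_METRICS

print(f"{share:.0%} mapped, {unmapped} metrics unmapped")
# prints "79% mapped, 23 metrics unmapped"
```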

So what’s the point and why should we care, if LoDP is already respected and used by the digital preservation community? In short, this exercise strongly demonstrates LoDP’s value. Because so many of the 16363 metrics (86 of 109) map to at least one LoDP category, and 16363 is an international standard, you can assert that LoDP is a trustworthy set of recommendations by which certain characteristics of digital preservation programs can be assessed. Here’s the big part: this means that an institution that may not be prepared to start with a full 16363 audit of its digital preservation program can feel confident in pursuing a smaller scale assessment of its workflows, digital systems, and infrastructure using the LoDP criteria. Using a tool like LoDP can help an organization identify the gaps it needs to close to improve the digital preservation performance of its technology, information security, fixity protocols, and file format management, and highlight the sustainable areas of its program where it is already achieving success. Through assessment and gap analysis based on tools like LoDP, an institution can identify, test, and implement more sustainable workflows, technologies, and, eventually with standards like 16363, organizational infrastructure.

And building more sustainable systems based on recognized standards is something we should all care about! Now for some of that standards pudding.

Note: Do you have comments or suggestions about our original LoDP-to-16363 mapping? We want to hear about it—feel free to drop us a line.

[1] See also, http://www.datasealofapproval.org/en/

[2] http://ndsa.org/activities/levels-of-digital-preservation/

[3] ISO 14721:2012 Open archival information system (OAIS) — Reference model. http://public.ccsds.org/publications/archive/650x0m2.pdf

[4] Ibid.

—Amy.